Two talks from string_data 2020

Haggai Maron (NVIDIA Research) and Sergei Gukov (Caltech), 12:00 EDT

Haggai Maron

Title: Leveraging Permutation Group Symmetries for the design of Equivariant Neural Networks

Abstract: Learning from irregular data, such as sets and graphs, is a prominent research direction that has received considerable attention in the last few years. The main challenge is deciding which architectures to use for such data types. I will present a general framework for designing network architectures for irregular data types that respect permutation group symmetries. In the first part of the talk, we will see that these architectures can be implemented using a simple parameter-sharing scheme. We will then demonstrate the applicability of the framework by devising neural architectures for two widely used irregular data types: (i) graphs and hypergraphs and (ii) sets of structured elements.
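As a rough illustration of the parameter-sharing idea (a minimal sketch of the simplest case, not code from the talk): for a set of n scalars, a linear map W commutes with every permutation exactly when it uses one shared weight `a` on the diagonal and one shared weight `b` everywhere off the diagonal. The helper names below are hypothetical; pure Python is used so the sketch has no dependencies.

```python
def shared_weight_matrix(n, a, b):
    """n x n weight matrix with one shared weight a on the diagonal and one shared weight b off it."""
    return [[a if i == j else b for j in range(n)] for i in range(n)]


def apply_matrix(W, x):
    """Plain matrix-vector product W @ x."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def equivariant_layer(x, a, b, bias=0):
    """The same map computed in O(n): y_i = a * x_i + b * sum_{j != i} x_j + bias."""
    total = sum(x)
    return [a * xi + b * (total - xi) + bias for xi in x]


def permute(x, perm):
    """Reorder a list: element k of the result is x[perm[k]]."""
    return [x[p] for p in perm]


x = [1, 2, 3]
perm = [2, 0, 1]
y = equivariant_layer(x, a=2, b=-1)  # [-3, 0, 3]

# Equivariance: permuting the input permutes the output in the same way.
assert permute(y, perm) == equivariant_layer(permute(x, perm), a=2, b=-1)
# The O(n) form agrees with the explicit parameter-shared matrix.
assert y == apply_matrix(shared_weight_matrix(3, a=2, b=-1), x)
```

With several feature channels per element, `a` and `b` become weight matrices rather than scalars, which gives the familiar DeepSets-style layer. Graphs and hypergraphs need larger shared-weight patterns over pairs or tuples of indices, which this sketch does not attempt.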

Sergei Gukov

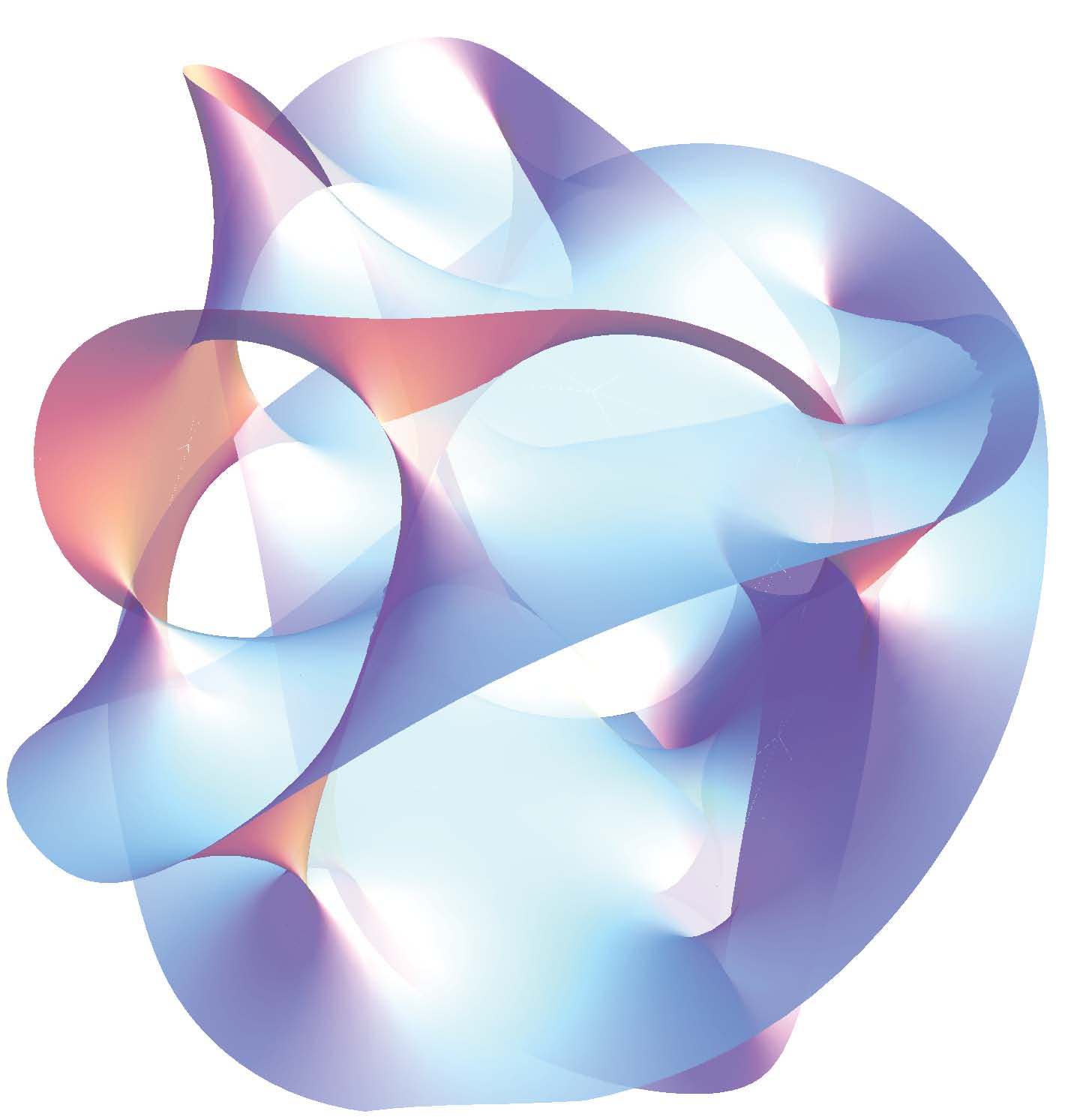

Title: Learning to Unknot

Abstract: We will apply the tools of Natural Language Processing (NLP) to problems in low-dimensional topology, some of which have direct applications to the smooth 4-dimensional Poincaré conjecture. We will tackle the UNKNOT decision problem and discuss how reinforcement learning (RL) can find sequences of Markov moves and braid relations that simplify knots, and can identify unknots by explicitly giving the sequence of unknotting actions. Based on recent work with James Halverson, Fabian Ruehle, and Piotr Sulkowski.