Euclidean Neural Networks: Adventures in learning with 3D geometry and geometric tensors.

Tess Smidt, Lawrence Berkeley Laboratory, 12:00 EDT

Abstract: Atomic systems (molecules, crystals, proteins, nanoclusters, etc.) are naturally represented by a set of coordinates in 3D space labeled by atom type. This is a challenging representation to use for neural networks because the coordinates are sensitive to 3D rotations and translations and there is no canonical orientation or position for these systems. One of the motivations for incorporating symmetry into machine learning models on 3D data is to eliminate the need for data augmentation – the 500-fold increase in brute-force training necessary for a model to learn 3D patterns in arbitrary orientations.

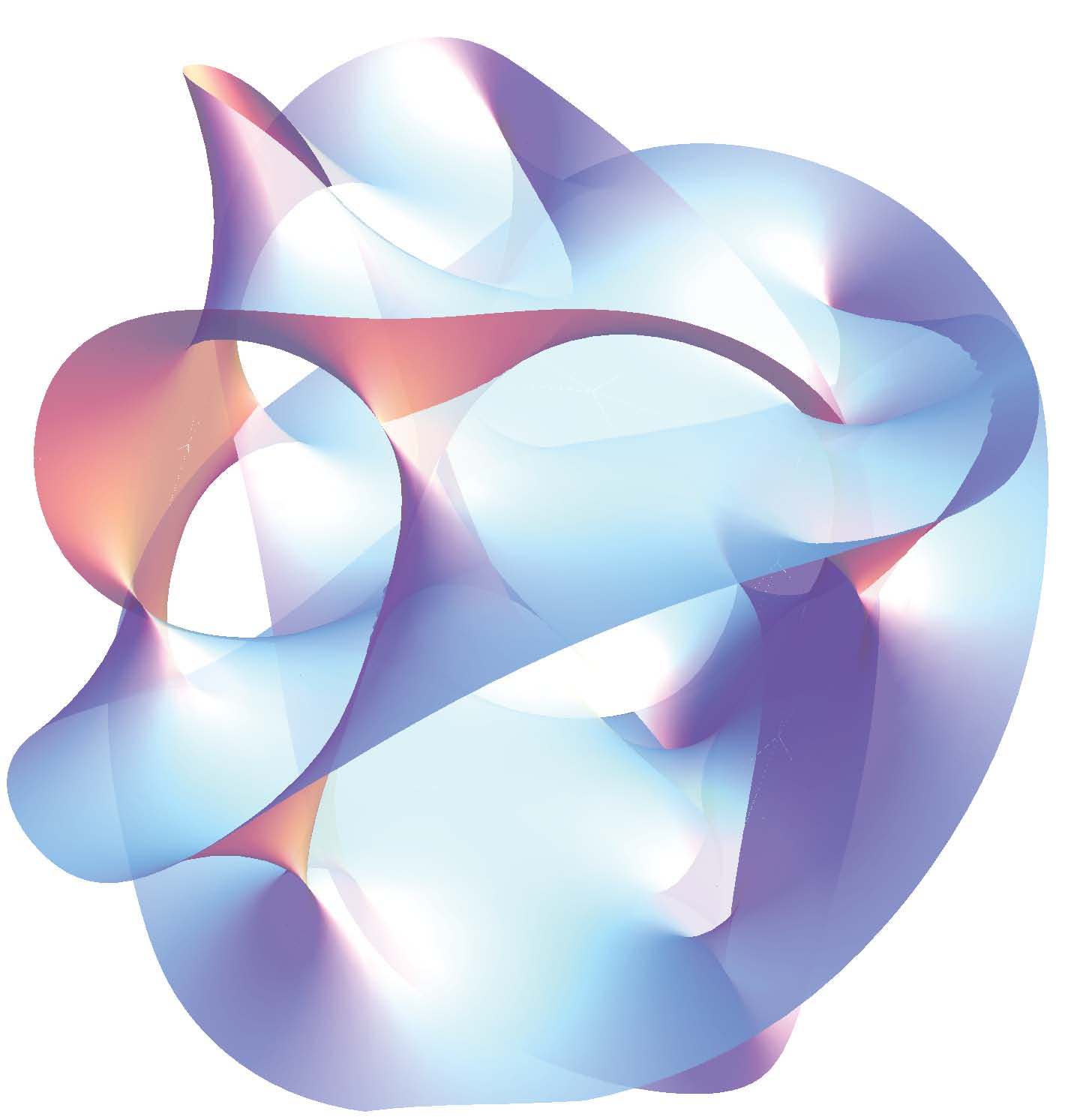

Most symmetry-aware machine learning models in the physical sciences avoid augmentation through invariance, throwing away coordinate systems altogether. But this comes at a price: many of the rich consequences of Euclidean symmetry are lost, including geometric tensors, point groups, space groups, degeneracy, and atomic orbitals.

We present a general neural network architecture that faithfully treats the equivariance of physical systems, naturally handles 3D geometry, and operates on the scalar, vector, and tensor fields that characterize them. Our networks are locally equivariant to 3D rotations and translations at every layer. In this talk, we describe how the networks achieve these equivariances and demonstrate their capabilities on simple tasks. We provide concrete coding examples showing how to build these models using e3nn, a modular open-source PyTorch framework for Euclidean Neural Networks (https://e3nn.org).
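To make the equivariance property concrete, here is a minimal sketch in plain Python. It does not use e3nn and is not the architecture presented in the talk. The function `toy_layer` and every helper name are hypothetical, chosen only for illustration. The toy layer maps each point to a weighted sum of relative vectors to the other points, with weights that depend only on distance. Under those assumptions, translating the input should leave the output unchanged, and rotating the input should rotate the output by the same rotation.

```python
import math

def rot_z(a):
    """Rotation matrix about the z axis by angle a (radians)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def rot_x(a):
    """Rotation matrix about the x axis by angle a (radians)."""
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def matmul(A, B):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def rotate(R, v):
    """Apply the 3x3 matrix R to the 3-vector v."""
    return [sum(R[i][j] * v[j] for j in range(3)) for i in range(3)]

def transform(points, R, t):
    """Rotate every point by R, then translate it by t."""
    return [[c + s for c, s in zip(rotate(R, p), t)] for p in points]

def toy_layer(points):
    """Hypothetical toy layer: out_i = sum_{j != i} exp(-|r_ij|^2) * r_ij,
    where r_ij = x_j - x_i. Only relative vectors and distances are used,
    so translations cancel and rotations carry through to the output."""
    out = []
    for i, xi in enumerate(points):
        acc = [0.0, 0.0, 0.0]
        for j, xj in enumerate(points):
            if i == j:
                continue
            r = [b - a for a, b in zip(xi, xj)]
            w = math.exp(-sum(c * c for c in r))  # distance-only weight
            acc = [a + w * c for a, c in zip(acc, r)]
        out.append(acc)
    return out

def equivariance_error(points, R, t):
    """Largest component-wise gap between layer(R x + t) and R layer(x)."""
    moved_then_layer = toy_layer(transform(points, R, t))
    layer_then_rotated = [rotate(R, v) for v in toy_layer(points)]
    return max(abs(a - b)
               for u, v in zip(moved_then_layer, layer_then_rotated)
               for a, b in zip(u, v))

if __name__ == "__main__":
    pts = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.5], [-0.3, 0.2, 0.8]]
    R = matmul(rot_z(0.7), rot_x(1.1))
    t = [2.0, -1.0, 0.5]
    print("max equivariance error:", equivariance_error(pts, R, t))
```

The reported error should be at the level of floating-point round-off. The same kind of numerical check, comparing "transform then apply" against "apply then transform", is a common way to test whether a layer is equivariant, whatever framework it is built in.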